As the capabilities of Generative AI and Large Language Models (LLMs) continue to evolve rapidly, the foundational architecture of automated enterprise translation is also undergoing a significant transformation.

The translation automation workflow that has been in place for the past two decades is largely an evolution of the widely used professional human translation process known as “TEP” (Translate, Edit, Proofread).

The adoption of Machine Translation (MT) largely supported the partial automation of this traditional workflow. In the resulting partially automated workflow, an automated MT step replaces “Translate”, while “Edit” becomes what is known as MTPE: human post-editing of the initial MT-generated translation. Proofreading largely remained a human step, intended to ensure high quality and fit-for-purpose final translations.

This workflow was well suited to a world in which Translation Memory (TM) matches and MT systems produced translations segment by segment, with little or no document-level context.

At the time, the vast majority of MT-generated translations contained significant translation errors and fluency issues. Translation professionals had to edit them, incorporate context, and ensure enterprise-grade adherence to terminology and style, as well as document-level consistency.

Traditional automated metrics for assessing the quality of MT-generated translations were largely designed around the capabilities and limitations of these MT systems and MTPE workflows.

Legacy lexical metrics such as BLEU, METEOR, and ChrF, as well as modern neural metrics such as COMET, were largely designed to analyze translations segment by segment and to detect and quantify linguistic adequacy and fluency errors.

These metrics were generally sufficient for benchmarking alternative MT systems and selecting the one that minimizes linguistic errors.

Referenceless Quality Estimation (QE) systems such as Phrase QPS and COMET-QE were also largely designed for this scenario. They enable efficiency gains by allowing the MTPE step to be skipped for segments where there is high confidence that the MT output is linguistically error-free.

The challenge: Automated evaluation in the new GenAI MT landscape

While the Neural MT models of the past ten years greatly improved in both accuracy and fluency, they did not fundamentally alter the underlying partially automated TEP workflow. LLM-generated translations, along with other new Generative AI (GenAI) capabilities, are, however, significantly changing this foundation.

We have moved beyond simple segment-level translation to a landscape in which LLMs generate context-aware, document-level translations that strive to align with enterprise language preferences such as terminology, branding, style, and tone.

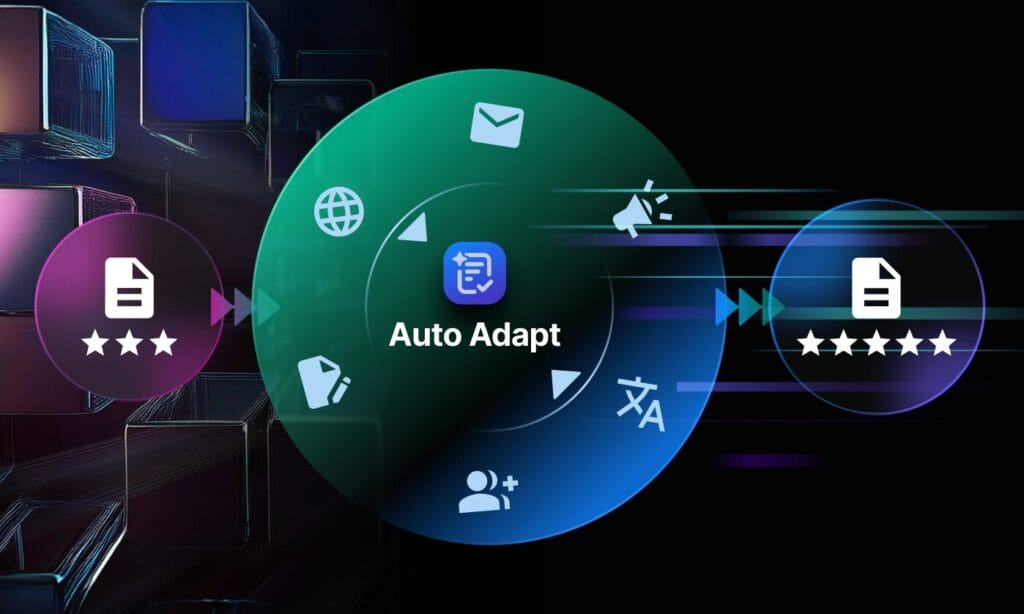

Furthermore, new capabilities such as Phrase’s AutoAdapt extend workflows with document-level target-language adaptations, automating much of the work previously performed by expert human reviewers.

This shift, while exciting, presents significant challenges for traditional automated translation quality evaluation, including modern metrics such as COMET and Quality Estimation (QE) systems such as Phrase QPS. Now that GenAI can largely automate the production of targeted, fit-for-purpose translations, how do we verify and ensure their quality?

The transition from Neural Machine Translation (NMT) to GenAI-driven translation introduces complexities that older automated evaluation methods struggle to handle. While NMT focused on semantic accuracy and grammatical correctness at the segment level, LLMs can now also target document-level consistency and adherence to specific enterprise guidelines.

This means traditional metrics, often designed for sentence-by-sentence analysis, may miss crucial aspects of overall translation quality, such as terminology consistency and tonal alignment.

Tackling the challenge: New methodologies for quality assessment

To address these new challenges, traditional automated MT evaluation measures must be augmented with new and refined evaluation methodologies.

Our AI Research team at Phrase has been tackling these challenges head-on, exploring several complementary approaches to automated evaluation that suit these GenAI-driven workflows. These approaches are designed to augment, rather than replace, our existing primary automated quality metrics: COMET and Phrase QPS.

Dedicated quality measures for modules such as AutoAdapt:

New workflow modules such as Phrase’s AutoAdapt are specifically designed to transform document-level translations so that they are more fit for purpose, ensuring consistent translation of pronouns and terminology, as well as consistent formality and tone.

To assess the effectiveness and production readiness of AutoAdapt, we developed several dedicated quality measures, specifically designed to capture the extent to which these targeted text transformations accomplish their adaptation targets.

These measures compute targeted statistics for terminology adherence and consistency across the document. They also track whether pronouns are consistently generated with the correct gender and formality, along with other translation statistics tied to specified enterprise preferences.
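To make this concrete, here is a minimal Python sketch of two such document-level statistics. It assumes a glossary listing each source term, its approved target rendering, and (hypothetically) some known non-approved variants. `GlossaryEntry` and `term_stats` are illustrative names, not part of any Phrase API, and simple case-insensitive substring matching stands in for the tokenization and alignment a production measure would need.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class GlossaryEntry:
    source: str           # source-language term
    approved: str         # approved target-language rendering
    variants: tuple = ()  # known non-approved renderings (hypothetical)


def term_stats(segments, glossary):
    """Return (adherence, consistency) for one document.

    segments: list of (source_segment, target_segment) pairs.
    A term is counted once per segment in which it occurs.
    """
    occurrences = approved_hits = 0
    terms_seen = consistent_terms = 0
    for entry in glossary:
        renderings = Counter()
        for src, tgt in segments:
            if entry.source.lower() not in src.lower():
                continue
            occurrences += 1
            tgt_lower = tgt.lower()
            if entry.approved.lower() in tgt_lower:
                approved_hits += 1
                renderings[entry.approved] += 1
                continue
            found = next((v for v in entry.variants if v.lower() in tgt_lower), None)
            renderings[found] += 1  # None means no known rendering was found
        if renderings:
            terms_seen += 1
            # Consistent: every occurrence used one and the same known rendering.
            if len(renderings) == 1 and None not in renderings:
                consistent_terms += 1
    adherence = approved_hits / occurrences if occurrences else 1.0
    consistency = consistent_terms / terms_seen if terms_seen else 1.0
    return adherence, consistency


glossary = [GlossaryEntry("invoice", "Rechnung", ("Faktura",)),
            GlossaryEntry("account", "Konto")]
segments = [("Open the invoice.", "Öffnen Sie die Rechnung."),
            ("Pay the invoice.", "Bezahlen Sie die Faktura."),
            ("Your account", "Ihr Konto")]
print(term_stats(segments, glossary))  # → (0.6666666666666666, 0.5)
```

Adherence here is the share of term occurrences rendered with the approved translation, while consistency is the share of glossary terms rendered the same way every time they appear. Keeping the two separate distinguishes a document that consistently uses the wrong term from one that mixes renderings.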

While applying these measures in isolation may not provide a complete picture to the end customer, they are highly effective at contrastively assessing the impact and production readiness of modules such as AutoAdapt.

Applying these measures contrastively to both versions of a target text, the input and the output of AutoAdapt, allows us to confirm that the transformations performed by AutoAdapt did in fact make the document more fit for purpose.
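The contrastive idea can be illustrated with a single measure scored on the AutoAdapt input and output. The sketch below assumes a German target text and a formal (“Sie”) register, and uses deliberately crude, hypothetical pronoun lists. It ignores real ambiguities, such as sentence-initial “Sie” meaning “she” or “they”, that an actual measure would have to resolve.

```python
import re

# Deliberately crude, hypothetical German address-pronoun lists.
FORMAL = {"Sie", "Ihnen", "Ihr", "Ihre", "Ihren", "Ihrem", "Ihrer", "Ihres"}
INFORMAL = {"du", "dich", "dir", "dein", "deine", "deinen", "deinem", "deiner", "deines"}


def formality_share(text, target="formal"):
    """Share of address pronouns in `text` that match the target register."""
    tokens = re.findall(r"\w+", text)
    formal = sum(token in FORMAL for token in tokens)  # case-sensitive: "Sie" vs "sie"
    informal = sum(token.lower() in INFORMAL for token in tokens)
    total = formal + informal
    if total == 0:
        return 1.0  # no address pronouns, so nothing violates the target
    return (formal if target == "formal" else informal) / total


def contrast(before, after, target="formal"):
    """Positive values mean the adapted text is closer to the target register."""
    return formality_share(after, target) - formality_share(before, target)


autoadapt_input = "Kannst du dein Passwort ändern? Sie finden es unter Konto."
autoadapt_output = "Können Sie Ihr Passwort ändern? Sie finden es unter Konto."
print(round(contrast(autoadapt_input, autoadapt_output), 3))  # → 0.667
```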

Another advantage of these measures is that, like QE metrics, they can largely be computed at runtime. This opens the door to using them as quality gatekeepers in automated workflows, flagging documents that need further attention and routing them to human review.
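A runtime gate built on such measures could be as simple as comparing each score with a threshold. The sketch below is hypothetical: the score names mirror the measures described above, and the threshold values are placeholders, not recommended settings.

```python
from dataclasses import dataclass, fields


@dataclass
class DocumentScores:
    term_adherence: float    # 0..1, e.g. from a terminology measure
    term_consistency: float  # 0..1
    formality_share: float   # 0..1


# Placeholder thresholds; real values would be tuned per customer and language.
THRESHOLDS = DocumentScores(term_adherence=0.95, term_consistency=0.9, formality_share=0.98)


def route(scores):
    """Return ("auto_publish" or "human_review", names of failed measures)."""
    failed = [f.name for f in fields(scores)
              if getattr(scores, f.name) < getattr(THRESHOLDS, f.name)]
    return ("human_review" if failed else "auto_publish"), failed


print(route(DocumentScores(1.0, 1.0, 1.0)))   # → ('auto_publish', [])
print(route(DocumentScores(0.9, 1.0, 0.99)))  # → ('human_review', ['term_adherence'])
```

Returning the names of the failed measures, not just a verdict, tells a human reviewer where to look first.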

The broader research community has also been adopting similar approaches. For example, the 2023 WMT Shared Task on Machine Translation with Terminologies adopted a similar term consistency (TC) measure and used it as a primary metric for evaluating submissions.

Human editing and lexical metrics:

The targeted measures above are useful at translation time, when “gold” reference translations are unavailable. In benchmarking and offline quality-testing scenarios, they can be supplemented by other measures.

In these scenarios, carefully edited final human translations can be generated and used as target references. Traditional lexical metrics such as Translation Edit Rate (TER) and Character n-gram F-score (ChrF) can then be employed to measure the “distance” between various intermediate translations and the final reference translations.
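For readers unfamiliar with ChrF, the following is a simplified, sentence-level sketch in the spirit of the original chrF formulation: character n-gram precision and recall (with whitespace removed) are averaged over n-gram orders 1 to 6 and combined into an F-score with β = 2, which weights recall more heavily than precision. It is for illustration only. Established implementations such as sacrebleu handle details like corpus-level aggregation differently and may report different numbers, so they should be preferred for actual benchmarking.

```python
from collections import Counter


def char_ngrams(text, n):
    """Counts of character n-grams, with whitespace removed as in chrF."""
    chars = "".join(text.split())
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(hypothesis, reference, max_n=6, beta=2.0):
    """Simplified sentence-level chrF on a 0-100 scale."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # one string is too short for this n-gram order
        matches = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(matches / sum(hyp.values()))
        recalls.append(matches / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p == 0 and r == 0:
        return 0.0
    b2 = beta ** 2
    return 100 * (1 + b2) * p * r / (b2 * p + r)


reference = "Bitte prüfen Sie Ihre Rechnung."
print(chrf("Bitte prüfen Sie Ihre Rechnung.", reference))  # identical text
print(chrf("Bitte prüf deine Faktura.", reference))        # lower score
```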

This makes it possible to validate that workflow steps such as AutoAdapt work as intended and significantly reduce the editing effort required to reach the reference translation.
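The comparison between intermediate translations and the final reference can also be expressed as an edit rate. The sketch below computes a word-level edit distance normalized by reference length. Note that it omits TER's block-shift operation, so it is effectively a normalized word error rate rather than full TER. The German sentences are invented for illustration.

```python
def word_edit_rate(hypothesis, reference):
    """Word-level Levenshtein distance divided by reference length (TER without shifts)."""
    hyp, ref = hypothesis.split(), reference.split()
    prev = list(range(len(ref) + 1))  # distances from an empty hypothesis
    for i, h in enumerate(hyp, start=1):
        curr = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            curr[j] = min(prev[j] + 1,             # delete a hypothesis word
                          curr[j - 1] + 1,         # insert a reference word
                          prev[j - 1] + (h != r))  # substitute, or match at no cost
        prev = curr
    return prev[-1] / max(len(ref), 1)


reference = "Bitte prüfen Sie Ihre Rechnung im Konto"
mt_output = "Bitte prüf deine Faktura im Konto"               # before adaptation
adapted = "Bitte prüfen Sie Ihre Rechnung in Ihrem Konto"    # after adaptation
print(round(word_edit_rate(mt_output, reference), 3))  # → 0.571
print(round(word_edit_rate(adapted, reference), 3))    # → 0.286
```

A lower rate for the adapted version than for the raw MT output is the signal that the adaptation step moved the text toward the human-edited reference.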

Contrastive A/B testing:

Another increasingly adopted approach to assessing the relative quality of GenAI-generated translations is to ask human experts to judge them holistically. Experts compare two complete translations side by side and indicate which one is better.

This approach can be used both in offline benchmarking and in live workflows, where experts indicate their preference when selecting a final translation from several alternatives.
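Side-by-side judgments are usually summarized as a win rate. The sketch below, using hypothetical labels, also applies a two-sided exact sign test to the decisive (non-tie) judgments, which is one common way to check whether an observed preference is likely to be more than noise.

```python
from collections import Counter
from math import comb


def summarize_ab(judgments):
    """Summarize side-by-side judgments labelled "A", "B", or "tie"."""
    counts = Counter(judgments)
    a, b = counts["A"], counts["B"]
    decisive = a + b
    win_rate_b = b / decisive if decisive else 0.5
    # Two-sided exact sign test: under H0 each decisive judgment is a fair coin flip.
    k = min(a, b)
    tail = sum(comb(decisive, i) for i in range(k + 1)) / 2 ** decisive
    return {"A": a, "B": b, "tie": counts["tie"],
            "win_rate_B": win_rate_b, "p_value": min(1.0, 2 * tail)}


judgments = ["B"] * 8 + ["A"] * 2 + ["tie"] * 2
print(summarize_ab(judgments))
# → {'A': 2, 'B': 8, 'tie': 2, 'win_rate_B': 0.8, 'p_value': 0.109375}
```

With 8 of 10 decisive judgments favoring B, the win rate looks strong, yet the sign test (p ≈ 0.11) suggests collecting more judgments before drawing a firm conclusion.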

LLM as a judge:

Finally, the emerging capabilities of recent LLMs have opened up new opportunities to use LLMs directly for automated assessment across a range of tasks, a setup that has come to be known as “LLM-as-a-judge”.

Using relatively small amounts of data collected through contrastive human A/B testing, LLMs can be specialized to perform such contrastive A/B assessments themselves. With In-Context Learning (ICL), we can prompt an LLM-as-a-judge with content preferences and examples of human judgments, enabling it to evaluate translations against those specific criteria.
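The prompt-assembly side of this can be sketched as follows. Everything here is an assumption for illustration: `call_llm` stands in for whatever model client is used (no specific provider API is implied), and the prompt wording is a placeholder. The hypothetical `judge_debiased` helper queries the model twice with the candidates swapped and keeps a verdict only if both orders agree, a simple guard against the position bias LLM judges are known to show. `agreement` measures how often the judge matches held-out human preferences.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ABExample:
    source: str
    translation_a: str
    translation_b: str
    preferred: str  # "A" or "B", as chosen by a human expert


def build_judge_prompt(preferences: str, examples: List[ABExample],
                       source: str, a: str, b: str) -> str:
    """Few-shot (in-context learning) prompt built from human A/B judgments."""
    parts = ["Compare two translations of the same source text.",
             f"Customer preferences: {preferences}",
             "Answer with a single letter: A or B.", ""]
    for i, ex in enumerate(examples, start=1):
        parts += [f"Example {i}", f"Source: {ex.source}",
                  f"A: {ex.translation_a}", f"B: {ex.translation_b}",
                  f"Preferred: {ex.preferred}", ""]
    parts += ["Now judge this pair.", f"Source: {source}", f"A: {a}", f"B: {b}", "Preferred:"]
    return "\n".join(parts)


def parse_verdict(reply: str) -> Optional[str]:
    """Map a raw model reply to "A", "B", or None if it is unusable."""
    match = re.match(r"\s*([AB])\b", reply.strip().upper())
    return match.group(1) if match else None


def judge_debiased(call_llm: Callable[[str], str], preferences: str,
                   examples: List[ABExample], source: str, a: str, b: str) -> Optional[str]:
    """Ask twice with the candidates swapped; keep the verdict only if both agree."""
    first = parse_verdict(call_llm(build_judge_prompt(preferences, examples, source, a, b)))
    swapped = parse_verdict(call_llm(build_judge_prompt(preferences, examples, source, b, a)))
    swapped = {"A": "B", "B": "A"}.get(swapped)  # map back to the original labels
    return first if first is not None and first == swapped else None


def agreement(judge_labels: List[Optional[str]], human_labels: List[str]) -> float:
    """Share of held-out pairs where the judge matches the human preference."""
    pairs = list(zip(judge_labels, human_labels))
    return sum(j == h for j, h in pairs) / len(pairs) if pairs else 0.0
```

Measuring agreement on human judgments that were not used as in-context examples gives a rough check on whether the judge can be trusted for a given customer and language pair.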

New agentic workflows:

To harness the potential of these emerging and existing assessment methodologies, more flexible workflows based on agentic AI modules are now actively being explored.

These workflows can flexibly chain together many or all of the approaches outlined above into a comprehensive process that verifies and ensures document-level quality, consistency, and alignment with enterprise standards.
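As a rough illustration of such chaining, the loop below runs a list of checks, asks a repair step to address any failures, and escalates to human review if problems remain after a fixed number of attempts. All names are hypothetical. In an agentic setup, the checks and the repair step would themselves be model-driven modules rather than the toy functions used here.

```python
from typing import Callable, List, Tuple

# A check returns (passed, reason); the reason describes the problem when it fails.
Check = Callable[[str], Tuple[bool, str]]


def run_quality_loop(doc: str, checks: List[Check],
                     repair: Callable[[str, List[str]], str],
                     max_repairs: int = 2) -> Tuple[str, str, List[str]]:
    """Check, repair, re-check; escalate to human review if failures persist."""
    failures: List[str] = []
    for attempt in range(max_repairs + 1):
        failures = [reason for passed, reason in (check(doc) for check in checks)
                    if not passed]
        if not failures:
            return doc, "accepted", []
        if attempt < max_repairs:
            doc = repair(doc, failures)  # e.g. an LLM-based adaptation step
    return doc, "human_review", failures


# Toy stand-ins for real modules (hypothetical):
def no_unapproved_term(doc: str) -> Tuple[bool, str]:
    return "Faktura" not in doc, "non-approved term 'Faktura'"


def replace_term(doc: str, failures: List[str]) -> str:
    return doc.replace("Faktura", "Rechnung")


print(run_quality_loop("Bitte prüfen Sie Ihre Faktura.", [no_unapproved_term], replace_term))
# → ('Bitte prüfen Sie Ihre Rechnung.', 'accepted', [])
```

Capping the number of repair rounds keeps the loop from cycling indefinitely, and passing the failure reasons to the repair step lets it target the specific problems the checks found.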

Conclusion: Embracing the future of automated translation quality evaluation

The rise of GenAI and LLM-powered translation is not just changing how we produce multilingual content; it is fundamentally reshaping how we need to measure its quality. As translation shifts from segment-level outputs to sophisticated, document-level results that reflect enterprise-specific language, our evaluation methods must keep up.

This is why a single metric or legacy approach is no longer enough. Combining targeted quality measures, human-led comparative assessments, and new uses of LLMs as evaluators provides a more complete view of “fit-for-purpose” translation performance.

Together, these methods give us a practical way to assess whether AI-driven translations truly meet the standards of consistency, accuracy, and brand fit that global businesses expect.

Looking ahead, these approaches will keep evolving alongside the multilingual content-generation technology itself. By putting this multi-layered framework in place now, we can ensure that automated translation quality continues to meet real enterprise needs as GenAI capabilities grow.

Ready to raise the bar for GenAI-powered translation?

Discover how Phrase combines AI innovation with enterprise-grade quality control. Explore solutions like AutoAdapt, Phrase QPS, and our evolving agentic workflows to deliver translations that perform where it counts.